GIST Scientists Unveil Strategies To Make Self-Driven Vehicles Passenger-Friendly

Researchers investigated a method of providing timely explanations to increase passengers’ sense of safety and confidence in automated vehicles

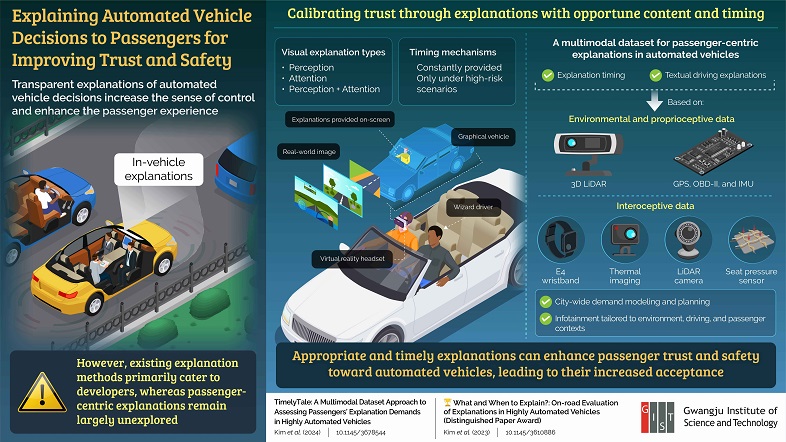

Automated vehicles promise to improve urban mobility, but passenger trust remains a challenge. Providing timely, passenger-specific explanations for automated vehicle decisions can help bridge this trust gap. To address this, researchers from the Gwangju Institute of Science and Technology introduced TimelyTale, a novel multimodal dataset designed to capture real-world driving scenarios and passengers’ explanation needs. This approach to in-vehicle explanation generation could improve passengers’ trust and confidence in automated vehicles.

The integration of automated vehicles promises several benefits for urban mobility, including increased safety, reduced traffic congestion, and enhanced accessibility. Automated vehicles also enable drivers to engage in non-driving-related tasks (NDRTs), such as relaxing, working, or watching multimedia, en route. However, widespread adoption is hindered by passengers’ limited trust. Explanations for automated vehicle decisions can foster trust by giving passengers a sense of control and reducing negative experiences. To be effective, these explanations must be informative, understandable, and concise.

Existing explainable artificial intelligence (XAI) approaches mainly cater to developers, focusing on high-risk scenarios or comprehensive explanations that may be unsuitable for passengers. Passenger-centric XAI models, by contrast, need to understand what information passengers need and when they need it in real-world driving scenarios.

To this end, a research team led by Professor SeungJun Kim from the Gwangju Institute of Science and Technology (GIST), South Korea, investigated the explanation demands of automated vehicle passengers in real-road conditions. They then introduced a multimodal dataset, called TimelyTale, which includes passenger-specific sensor data for timely and context-relevant explanations. “Our research shifts the focus of XAI in autonomous driving from developers to passengers. We have developed an approach for gathering passengers’ actual demand for in-vehicle explanations and methods to generate timely, situation-relevant explanations for passengers,” explains Prof. Kim.

Their findings are available in two studies published in the Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies on September 27, 2023, and September 9, 2024. The authors received the ‘Distinguished Paper Award’ at UbiComp 2024 for their study titled ‘What and When to Explain?: On-road Evaluation of Explanations in Highly Automated Vehicles’.

The researchers first used augmented reality to study, under real driving conditions, how different types of visual explanations (perception, attention, and a combination of both) and their timing affect passenger experience. They found that showing the vehicle’s perception state alone improved trust, perceived safety, and situational awareness without overwhelming the passengers. They also discovered that traffic risk probability was the most effective cue for deciding when to deliver explanations, especially when passengers felt overloaded with information.
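The studies do not spell out how risk-based timing was implemented. As a rough illustration only, the sketch below assumes a traffic risk probability between 0 and 1 is already available at each moment (for example, from the vehicle’s perception system) and triggers an explanation when that probability crosses a threshold. The class `ExplanationTrigger`, the `threshold` and `cooldown_s` values, and the cooldown rule that limits repeated explanations are all hypothetical and not taken from the research.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class ExplanationTrigger:
    """Hypothetical rule: explain when the estimated traffic risk crosses a threshold."""
    threshold: float = 0.6   # assumed risk level that warrants an explanation
    cooldown_s: float = 5.0  # assumed minimum gap (seconds) between explanations
    _last_time: Optional[float] = field(default=None, init=False)

    def should_explain(self, t: float, risk: float) -> bool:
        """Return True if an explanation should be shown at time t (seconds)."""
        if risk < self.threshold:
            return False
        if self._last_time is not None and t - self._last_time < self.cooldown_s:
            # Suppress repeats so the passenger is not flooded with explanations.
            return False
        self._last_time = t
        return True


def explanation_times(samples: List[Tuple[float, float]],
                      trigger: ExplanationTrigger) -> List[float]:
    """Return the timestamps at which explanations would be delivered."""
    return [t for t, risk in samples if trigger.should_explain(t, risk)]


# Illustrative (made-up) stream of (time in seconds, traffic risk probability).
samples = [(0.0, 0.2), (1.0, 0.7), (2.0, 0.9), (7.0, 0.8), (8.0, 0.3)]
print(explanation_times(samples, ExplanationTrigger()))  # [1.0, 7.0]
```

The cooldown is one simple way to reflect the finding that timing matters most when passengers feel overloaded; a learned model, like the one the researchers describe, would replace the fixed threshold with predictions from passenger and driving data.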

Building upon these findings, the researchers developed the TimelyTale dataset. It uses exteroceptive data (about the external environment, such as sights and sounds), proprioceptive data (about the body’s positions and movements), and interoceptive data (about internal bodily sensations, such as pain), gathered from passengers with a variety of sensors in naturalistic driving scenarios, as key features for predicting their explanation demands. Notably, this work also incorporates the concept of interruptibility, which refers to passengers shifting their focus from NDRTs to driving-related information. The method identified both the timing and frequency of passengers’ demands for explanations, as well as the specific explanations that passengers want in different driving situations.

Using this approach, the researchers developed a machine-learning model that predicts the best time to provide an explanation. Additionally, as a proof of concept, the researchers conducted city-wide modeling to generate textual explanations based on different driving locations.

“Our research lays the groundwork for increased acceptance and adoption of autonomous vehicles, potentially reshaping urban transportation and personal mobility in the coming years,” remarks Prof. Kim.